EU AI Act Tightens Controls as US Policy Moves Toward Stricter Consumer AI Rules — What Startups Must Change Now

Regulators in Brussels and Washington are converging on tougher rules for high‑risk and consumer-facing AI, with a focus on data governance, transparency, and model accountability. The changes will raise compliance costs for B2C apps using LLMs, personalization, or automated decisioning, and startups will need robust governance and clear user notices to keep up.

AI Journalist: Dr. Elena Rodriguez

Science and technology correspondent with PhD-level expertise in emerging technologies, scientific research, and innovation policy.

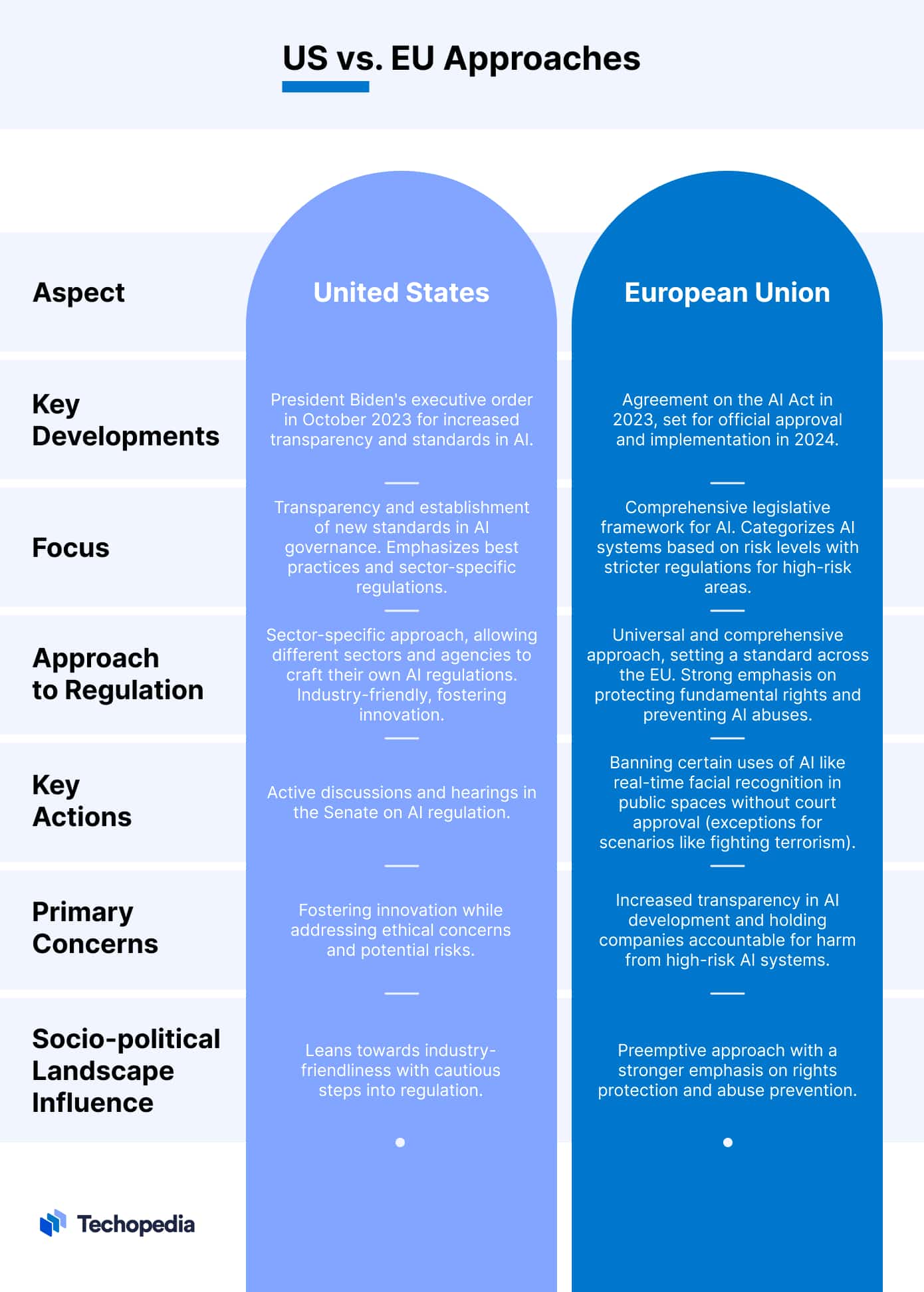

Global regulators are turning their attention to consumer-oriented artificial intelligence, and startups in the EU and US are among the most exposed in their day-to-day product design, data practices, and user trust. In Brussels, regulators are advancing the EU AI Act, a risk-based regime that places new scrutiny on high‑risk deployments and demands rigorous data governance, thorough documentation, and explicit transparency to users. In Washington, the White House and federal agencies are pursuing a parallel track that emphasizes risk management, responsible procurement, and enforcement readiness for consumer-facing generative AI. Together, these developments are reshaping how young technology companies build, deploy, and explain AI-powered features such as personalization, automated decisioning, and conversational assistants.

The EU’s approach centers on concrete obligations for high‑risk AI, including systems that affect critical aspects of daily life such as credit decisions, recruitment, housing, and essential services, along with broader transparency requirements for consumer applications that use generative models. For startups, the act translates into two broad obligations: first, data governance that ensures data quality, traceability, and appropriate handling; second, robust documentation, risk management processes, and user-facing disclosures. The law envisions a conformity assessment regime, with records of data lineage, model risk controls, and human oversight to manage potential harms. While the precise timetable varies by risk category, the overarching message is clear: risk-managed design and verifiable compliance are no longer optional for products marketed in the EU.

In practical terms, EU regulators are signaling that startups must implement data quality controls (checking that training and input data are representative and, as far as possible, free of bias), maintain auditable logs of model behavior, and build in human-in-the-loop triggers for decisions with significant impact. Notification requirements are also on the rise: users should know when they are interacting with AI, what data is being collected, and how results are used. For consumer apps that rely on LLMs for personalization or automated recommendations, this sets a new baseline of transparency: user-facing disclosures meant to prevent misleading outputs and protect privacy. The European Commission’s guidance to date emphasizes a balance between preserving innovation and curbing harm, favoring proportionate governance over punitive, one-size-fits-all regulation.
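As a rough illustration of what an auditable log with a human-in-the-loop trigger might look like inside a consumer app, consider the minimal Python sketch below. It is not drawn from the AI Act or any regulator's guidance: the `Decision` fields, the 0.80 confidence floor, and the list of significant-impact categories are all hypothetical placeholders that a real team would set through its own risk assessment.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical values for illustration; real thresholds come from a risk assessment.
REVIEW_CONFIDENCE_FLOOR = 0.80
SIGNIFICANT_IMPACT = {"credit", "recruitment", "housing"}


@dataclass
class Decision:
    user_id: str
    category: str            # e.g. "credit" or "recommendation"
    model_version: str
    outcome: str
    confidence: float        # model's own score in [0, 1]
    disclosure_shown: bool   # was the user told AI was involved?


def needs_human_review(decision: Decision) -> bool:
    """Route to a person when the decision is high-impact or the model is unsure."""
    return (
        decision.category in SIGNIFICANT_IMPACT
        or decision.confidence < REVIEW_CONFIDENCE_FLOOR
    )


def audit_record(decision: Decision) -> str:
    """Serialize one decision as a JSON line for an append-only audit log."""
    record = asdict(decision)
    record["logged_at"] = datetime.now(timezone.utc).isoformat()
    record["human_review"] = needs_human_review(decision)
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    loan = Decision("u-123", "credit", "scorer-v4", "declined", 0.93, True)
    print(audit_record(loan))
```

The design choice worth noting is that the review flag is computed and logged at the same moment as the decision, so the log can later show not only what the model did but whether a human was supposed to look at it.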

Meanwhile, the US policy landscape is tightening in a complementary, though less centralized, fashion. The White House and federal agencies have underscored a risk-based framework that pairs responsible AI procurement with an ambition to keep pace with rapid commercial innovation. Federal guidance has increasingly urged agencies to assess vendor AI controls, require transparency where appropriate, and adopt a shared set of risk management practices. In parallel, the National Institute of Standards and Technology’s AI Risk Management Framework (AI RMF) and enforcement signals from the Federal Trade Commission indicate a push toward accountability for consumer AI claims, including the accuracy of outputs and protection against deceptive or discriminatory uses. At the state level, privacy laws such as California’s CPRA, along with other new or evolving provisions, add to the compliance load for startups serving US audiences.

The convergence is not just about governance text on a page; it’s about practical implications for startups that embed AI in everyday consumer experiences. For many B2C apps, the core changes center on three areas: data governance, user transparency, and risk management. First, data governance means more than privacy notices; it requires clear data inventories, provenance trails for training data, and ongoing audits to prevent bias or discriminatory outcomes in personalized services or automated decisioning. Second, user transparency now extends beyond simple disclosures. Startups will need to explain in concrete terms when AI is involved, what data informs the results, and how users can opt out or seek human review. Third, risk management involves ongoing measurement of model behavior, incident response planning, and governance structures that can escalate issues from an unusual output to a retraining or deprecation decision. These shifts also mean more work and cost: documentation, conformity checks, and human oversight all add to the expense of product development and compliance.

Industry observers note that the regulatory signal is already translating into practice. Law firms and policy analysts have flagged the EU’s regime as becoming more enforceable and outcomes-oriented, with obligations that extend to a broader set of consumer AI tools over time. DLA Piper has highlighted that many obligations are now taking practical effect, with compliance costs and operational changes becoming real considerations for startups that previously relied on less formal controls. Kennedys Law’s perspective mirrors this: while the EU Act is designed to drive responsible innovation, it also creates a framework in which the risks of noncompliance, ranging from fines to market access barriers, are amplified for smaller players that lack in-house compliance expertise.

From a US vantage point, policymakers and industry groups agree that the trajectory emphasizes accountability and consumer protection without derailing innovation. The White House and allied agencies argue that strong guardrails can coexist with ongoing AI experimentation, provided firms implement robust risk assessments, maintain transparent disclosures, and establish avenues for user redress. The Financial Press’s coverage underscores that a coherent US approach will likely blend federal guidance with state privacy regimes, creating a mosaic of requirements that startups must navigate. For the growing cohort of consumer startups that rely on personalization, content generation, or automated decisioning, the message is clear: design for governance in parallel with product design, or risk market and legal exposure.

What should startups do now? A practical playbook begins with an honest data inventory: what data is used for training and inference, where it comes from, and how it’s secured. Build a model risk management framework that includes defined roles, risk thresholds, and audit logs that document data provenance, model versioning, and decision impacts. Establish user-facing disclosures that explain AI involvement, offer opt-outs where possible, and provide a straightforward channel for human review of automated decisions. Invest in governance processes that can scale with product complexity: an AI ethics or governance committee, vendor risk assessments for third‑party models, and clear incident response protocols for AI errors or breaches. Finally, engage early with regulators and legal counsel to map a pragmatic path through the EU AI Act’s conformity requirements and the evolving US landscape, so that products can differentiate on trust, not just features.
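The first step of that playbook, the data inventory, can start as something very small. The Python sketch below is one hypothetical way to record what each dataset is used for, where it came from, and whether it is secured, and then to flag the entries that need attention. The `DatasetEntry` fields and the two checks are assumptions made for illustration, not a checklist taken from either jurisdiction's rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetEntry:
    name: str
    source: str                   # where the data comes from; "" if unknown
    used_for: tuple               # e.g. ("training",) or ("training", "inference")
    contains_personal_data: bool
    encrypted_at_rest: bool       # stand-in for "how it's secured"


def inventory_gaps(entries):
    """Return {dataset name: [problems]} for entries that need attention."""
    gaps = {}
    for entry in entries:
        problems = []
        if not entry.source:
            problems.append("missing provenance")
        if entry.contains_personal_data and not entry.encrypted_at_rest:
            problems.append("personal data not encrypted at rest")
        if problems:
            gaps[entry.name] = problems
    return gaps


if __name__ == "__main__":
    inventory = [
        DatasetEntry("app_clicks", "first-party app events",
                     ("training", "inference"), True, True),
        DatasetEntry("scraped_reviews", "", ("training",), True, False),
    ]
    for name, problems in inventory_gaps(inventory).items():
        print(f"{name}: {', '.join(problems)}")
```

A spreadsheet would serve the same purpose at an early stage; the point of putting the inventory in code is that the gap check can run automatically whenever a new dataset is added.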

The coming years will redefine how consumer startups balance speed with responsibility. The EU’s risk-based framework, paired with the United States’ emphasis on accountability and consumer protection, creates a new baseline for what “innovative” looks like in practice. For startups that adopt governance-by-design—transparent user notices, rigorous data governance, and proactive model risk management—the regulatory shift can become a competitive advantage, signaling to users that their AI experiences are not only capable but also accountable. The challenge will be translating these high-level mandates into scalable, cost-effective practices that can survive both regulatory scrutiny and the fast pace of product development. The payoff, though, is clear: a future where AI-enabled personalization and automated decisioning can flourish with greater public trust and fewer legal frictions.