OpenAI Pulls Intrusive In-App Promotions from ChatGPT

OpenAI turned off certain in-app promotional messages in ChatGPT after users complained that the suggestions felt like ads and screenshots of unrelated product links circulated online. The move highlights the tension between funding expensive AI services and preserving user trust; the company said it would build controls to let people dial suggestions down or off.

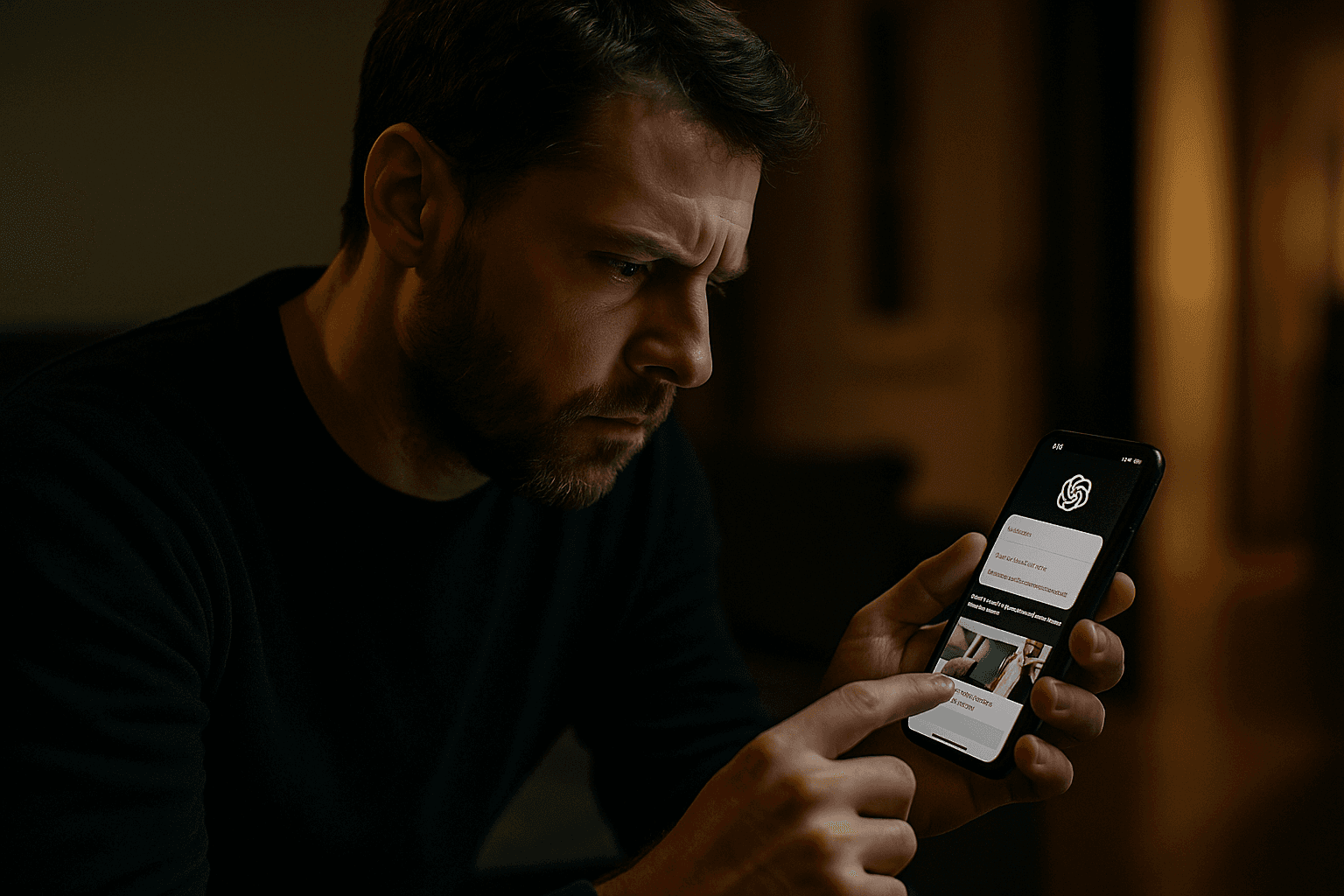

OpenAI disabled a set of in-app promotional messages in ChatGPT on December 8, 2025, after users raised alarms that the chatbot was surfacing what felt like advertising beneath unrelated conversations. Screenshots of the messages spread on social media and prompted the company to step back from tests that linked users to partner apps such as Peloton and Target.

The messages were described internally by OpenAI engineers as an experiment intended to surface partner apps and improve app discovery inside the ChatGPT interface. In practice the promoted links appeared beneath chats that had nothing to do with fitness or shopping, creating confusion and prompting complaints about apparent commercial intrusion into ordinary conversations.

In a post on X, OpenAI chief research officer Mark Chen acknowledged the misstep and said the company would change course. He wrote that “anything that feels like an ad needs to be handled with care, and we fell short.” The acknowledgment followed public debate over whether and how advertising or commerce features should be introduced into widely used AI chat services.

An OpenAI data engineer who discussed the experiment internally emphasized that the tests were not financial ads, but said the lack of relevancy made them confusing for users. OpenAI said it had disabled the feature while it built better controls to let users dial suggestions down or off. Those controls are intended to give users a clear way to opt out of discovery prompts and to tune how recommendations appear in context.

The episode unfolded amid intensified scrutiny of possible advertising and shopping features as OpenAI searches for sustainable revenue streams to support costly model training and operation. The company is competing in a market where rivals are exploring varied monetization strategies, and pressure to generate income has put features that blur the line between service and commerce under the microscope.

Nick Turley, OpenAI's head of ChatGPT, had earlier asserted that there were no live ad tests, a claim that was called into question when the screenshots and engineers' accounts emerged. The resulting controversy illustrated how quickly trust can erode when commercial content appears inside a conversational interface without clear relevance or user consent.

Beyond the immediate product implications, the incident raises broader questions about the ethics of integrating commerce into generative AI. Users expect conversational systems to prioritize helpfulness and privacy. When suggestions resemble advertising, they can undermine the perceived impartiality of the system and complicate decisions about data use and transparency.

OpenAI's decision to pause the experiment and promise user controls signaled an awareness of those risks. The company will now need to demonstrate not only technical fixes but also clear policies and safeguards that preserve the integrity of user interactions while it pursues viable business models. The balance between funding advanced AI and maintaining public trust will remain a central challenge for the industry as a whole.