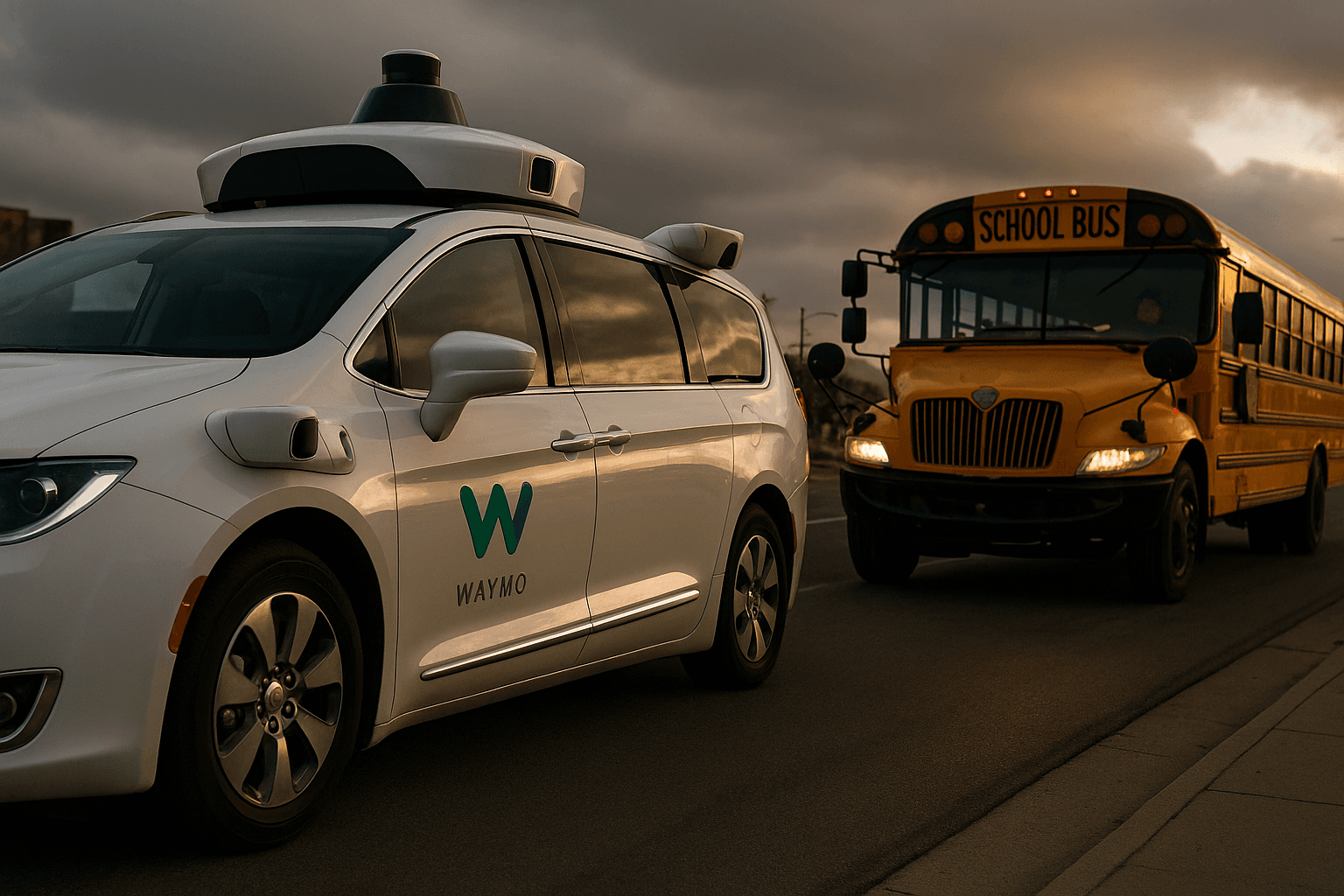

Waymo to File Voluntary Software Recall After Bus Passings Revealed

Waymo said it will file a voluntary software recall with federal regulators after an investigation found its vehicles passed stopped school buses in multiple jurisdictions, a development that raises fresh questions about the safety of autonomous driving systems. The move will require a fleet-wide software update, and regulators say they will continue to monitor compliance and the company’s remedial steps.

On December 7, Waymo told federal regulators it would file a voluntary software recall to fix an issue that contributed to several incidents in which its vehicles passed stopped school buses. The recall follows a National Highway Traffic Safety Administration investigation into a cluster of illegal bus passings reported across multiple jurisdictions, and comes as autonomous vehicle technology faces heightened scrutiny after a series of high-profile safety episodes.

NHTSA opened the probe after receiving reports that Waymo vehicles had failed to stop for school buses displaying stop arms and flashing lights, a violation of traffic laws in most states. The agency sent detailed questions to Waymo and set a deadline for the company’s responses as investigators sought to understand how the vehicles’ perception and decision systems processed the scenarios. Regulators said the planned recall would require a software update to Waymo’s fleet, and that NHTSA would continue to monitor compliance and evaluate the company’s remedial steps.

Waymo acknowledged in regulatory filings that its software exhibits certain edge-case behaviors that need correction. The company said in its notice that no injuries have been reported in the incidents under review. The firm did not disclose the total number of incidents tied to the probe or specify the jurisdictions involved, citing the ongoing nature of the investigation.

The recall is voluntary, meaning Waymo initiated the process rather than being compelled to do so by NHTSA. Still, the action amounts to a rare public concession by one of the most advanced autonomous vehicle operators, which is moving to correct a behavior that endangers a particularly vulnerable group of road users. School buses evoke intense public concern because of the concentration of children and the clear legal obligations drivers face when buses are stopped to load or unload passengers.

Technically, the problem described by regulators and Waymo fits a broader challenge for autonomous systems. Edge cases are the rare, ambiguous, or novel situations that machine learning models and rule-based systems struggle to classify and respond to reliably. Correcting those behaviors often requires not only software parameter changes but also additional training data, new sensor fusion strategies, and more conservative operational rules in certain environments. For a fleet operating in real time on public roads, rolling out and verifying such changes is a complex logistical and engineering task.

The recall also underscores the evolving relationship between regulators and companies developing autonomous technology. NHTSA’s involvement illustrates an enforcement mechanism that pairs investigation with oversight of remedy implementation. For Waymo, which has long positioned safety as a central selling point of its technology, the recall may complicate regulatory approvals and municipal relationships at a time when public trust remains fragile.

As NHTSA evaluates Waymo’s corrective plan, the outcome will be watched by cities, school districts, and other autonomous vehicle operators. The episode highlights the dual imperative facing developers and regulators: to innovate while ensuring systems behave predictably in all but the rarest of circumstances, especially when the safety of children is at stake.