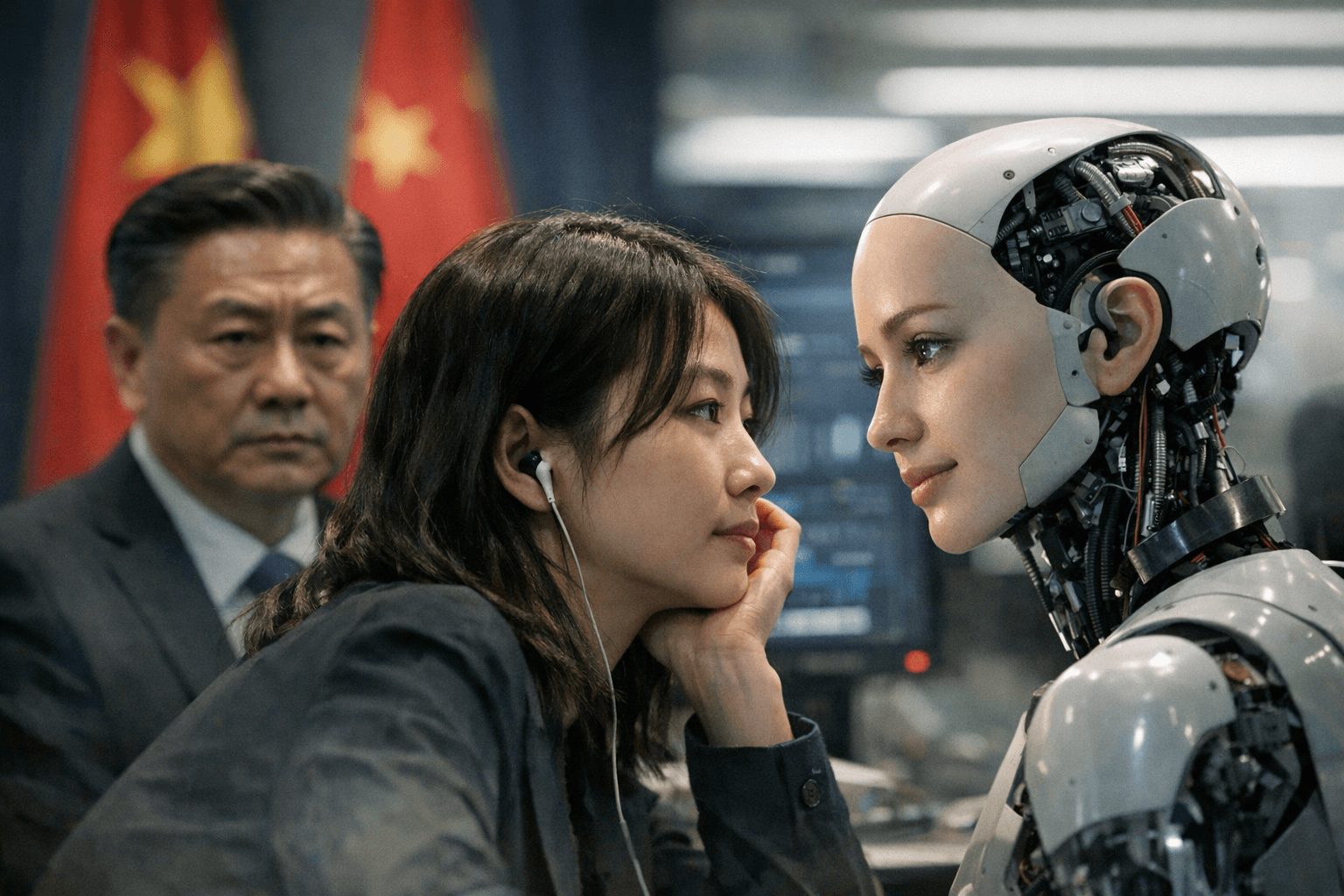

China Moves to Regulate AI Services That Mimic Human Emotions

China’s internet regulator published draft rules to govern consumer AI that simulates personalities and engages users emotionally, aiming to curb addiction risks, protect data, and safeguard public order. The measures would impose disclosure, lifecycle safety, algorithm governance, and content controls, and could reshape how domestic and foreign firms operate AI in China.

On December 27, 2025, the Cyberspace Administration of China released a draft regulation for public comment that would tighten oversight of consumer-facing artificial intelligence services that simulate human personality traits and interact with users on an emotional level. The measure, titled Interim Measures for the Administration of Anthropomorphic Interactive Services Using Artificial Intelligence, targets products and services offered to the public in China that present simulated thinking patterns, communication styles, or emotional responsiveness across text, image, audio, and video formats.

The draft frames the policy as an ethics and safety intervention to manage a range of risks from rapidly spreading anthropomorphic AI. It would require providers to make clear disclosures so users know when they are interacting with software rather than a person. Services would also be obliged to include warnings and prompts aimed at preventing excessive use and to take steps to intervene when users show signs of addiction or unhealthy emotional dependence.

Beyond user-facing warnings, the draft assigns providers safety responsibilities across the full lifecycle: design, development, deployment, and operation. Companies would need to establish systems for algorithm review and governance and implement measures for data security and personal information protection. The draft also makes the launch of human-like features conditional on completing security assessments designed to address national security and public order concerns. Providers would be required to report to regulators once certain user thresholds are reached, though the draft released for comment did not specify the numerical triggers for those reporting obligations.

Regulators cast the measures as an attempt to balance innovation with social protection as AI capabilities expand into companionship and therapeutic-style interactions. Authorities cited psychological risks, including addiction and emotional dependence, as drivers of the proposal, along with traditional concerns over data misuse and potential impacts on national security and public order. By placing obligations on companies at each stage of the product lifecycle, Beijing is signaling an intent to treat anthropomorphic interactive AI not simply as software but as a social instrument requiring continuous oversight.

The draft leaves several important details unresolved. The public notice did not set out the length of the comment period or a timetable for finalizing the rules. It also did not specify enforcement mechanisms or penalties for noncompliance, and it was unclear whether the provisions would explicitly extend to foreign firms whose services are accessible in China. Major technology companies that operate large language models or virtual companion services have not released formal responses to the announcement.

Implementation of these measures could reshape product design and market access for AI services aimed at personal interaction. Developers may need to redesign interfaces to emphasize transparency and build monitoring systems to identify problematic user behavior. For users, the rules could mean clearer signals about when emotion-seeking interactions are machine-mediated and more safeguards against overreliance on artificial companions.

The draft is open for public comment and is the latest move in a broader regulatory push by Beijing to govern digital platforms and emerging technologies. Observers will be watching how quickly the measures are finalized and how regulators interpret obligations for algorithm governance and cross-border providers.
