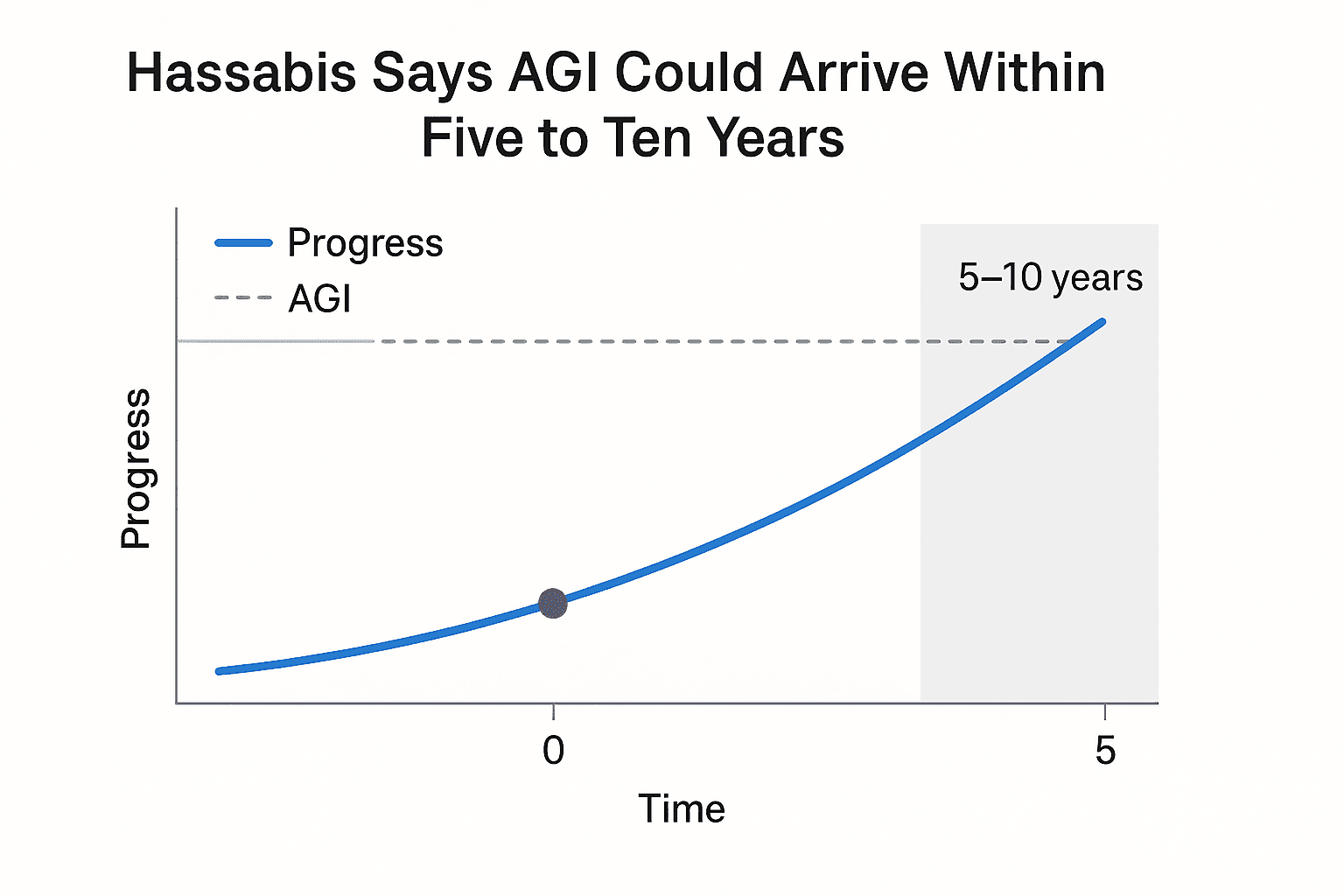

Hassabis Says AGI Could Arrive Within Five to Ten Years

At Axios' AI Plus Summit in San Francisco, Google DeepMind CEO Demis Hassabis tells an audience that artificial general intelligence is on the horizon and could emerge within the next five to ten years. He frames AGI as potentially "transformative" for humanity while warning that immediate, real-world risks, such as cyberattacks on energy and water infrastructure, demand urgent mitigation.

At Axios' AI Plus Summit in San Francisco today, Google DeepMind Chief Executive Demis Hassabis said artificial general intelligence (AGI), meaning systems that meet or exceed broad human cognitive abilities, is on the horizon and could arrive within the next five to ten years. He described the moment as potentially "transformative" for human society and pointed to recent progress, including Google's Gemini model family, as evidence of a clear trajectory toward more general capabilities. He also noted that one or two further breakthroughs beyond current scaling may still be necessary.

Hassabis singled out "world models" as a technical frontier to watch over the next 12 months. He described these systems as architectures that build internal simulations to predict and reason about physical reality, adding that progress in this area could accelerate the transition from narrow, task-specific tools to agents that understand and manipulate complex environments in a more general way.

While sketching a near-term timeline for AGI, Hassabis balanced his optimism with stark warnings about existing harms. He said some dangerous uses of current AI are already a reality and flagged cybersecurity threats to critical infrastructure as a pressing concern, pointing specifically to attacks on energy and water systems as plausible scenarios that require immediate attention from both industry and regulators.

The remarks come as policymakers, corporate leaders and safety researchers debate how to govern rapidly advancing AI systems. Hassabis urged a coordinated approach, arguing that the dual challenge of unleashing powerful capabilities and guarding against catastrophic misuse demands both technical safety work and stronger public policy. He emphasized that governments and industry share a responsibility to invest in resilience, detection and mitigation strategies for high-consequence threats.

Technologists note that moving from large language models and multimodal systems to AGI will likely require advances in long-range planning, robust world representation and the ability to generalize learning across tasks. The Gemini family represents one strand of that evolution, combining scale with multimodal inputs, but experts caution that scale alone may not deliver general intelligence without new algorithmic ideas and rigorous safety guardrails.

Public debate over timelines matters because it shapes where resources flow and how urgently regulators move. A five-to-ten-year window compresses the policy horizon for emergency preparedness, national security planning and international coordination on standards and norms. It also raises the pressure on industry to harden services that control physical infrastructure and to share threat intelligence with public authorities.

Hassabis' assessment frames a clear paradox for the coming years: the same advances that promise sweeping societal benefits could, if left unchecked, enable attacks or accidents with systemic consequences. His call at the summit underscored the need for a sustained, cross-sector effort to pursue innovation while building robust protections for critical systems and the public they serve.