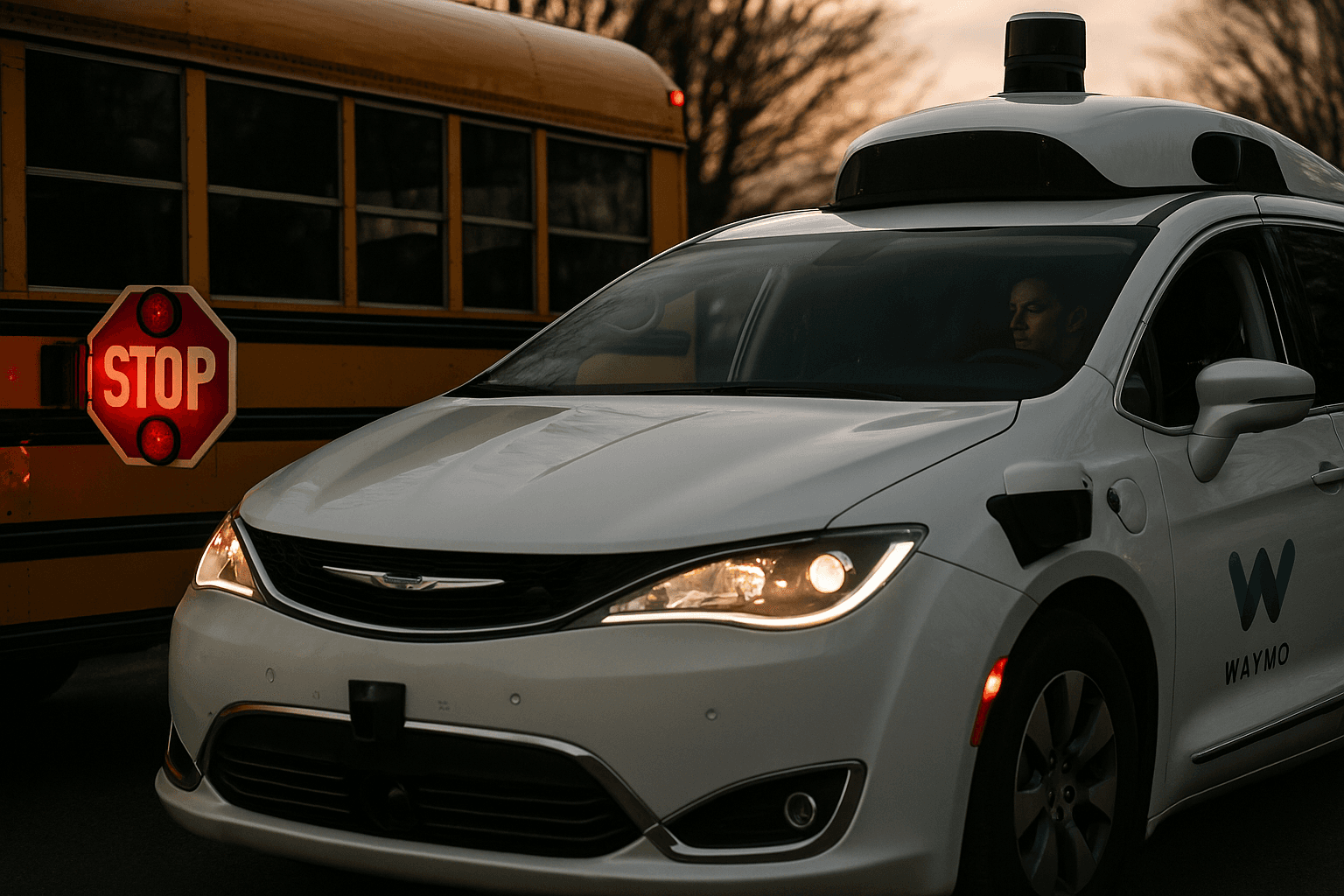

NHTSA Expands Inquiry into Waymo Over School Bus Violations

Federal safety officials broadened their review after reports that Waymo autonomous vehicles passed stopped school buses with extended stop arms, a violation that can put children at risk. The inquiry could force software changes, operational limits near schools, or a recall, raising fresh questions about how robotaxis handle critical safety scenarios.

The U.S. National Highway Traffic Safety Administration on December 5 expanded an investigation into incidents in which Waymo autonomous vehicles allegedly passed stopped school buses displaying extended stop arms. Local authorities, including a Texas school district, have reported multiple citations and flagged at least one episode in which a Waymo vehicle passed a stopped bus while children were disembarking, prompting the federal review.

NHTSA said the broadened inquiry seeks detailed information about Waymo’s software and operational practices. Regulators are asking whether recent software updates may have altered how vehicles interpret school bus signals or react to roadside cues. The agency is also probing whether Waymo can or should suspend operations near schools during morning pickup and afternoon drop-off times, and whether a recall or software remedy is warranted to address systemic risk.

The investigation marks a significant escalation for a company that has positioned its autonomous taxis as a safer alternative to human drivers. Waymo has said it is cooperating with the inquiry and has pointed regulators to company safety metrics that show reductions in certain types of crashes. The company’s statement framed those data as part of an overall safety record even as investigators examine the specific incidents involving school buses.

Local school officials and state and municipal regulators have urged swift fixes and heightened caution, citing the particular vulnerability of children at bus stops. Passing a stopped school bus with an extended stop arm is a traffic violation in most jurisdictions because it exposes children to crossing traffic. Authorities say the reports involving Waymo vehicles underline the stakes for software decision-making when automated systems interact with complex and variable school-zone environments.

Technical questions at the center of the probe are familiar to engineers who design perception stacks and decision logic for automated vehicles. Sensors must reliably detect bus-mounted stop signs and moving children, classification algorithms must interpret the context, and higher-level rules must mandate safe behavior even when sensor inputs are ambiguous. Regulators will examine whether software policy thresholds, sensor calibration, or operational parameters left gaps that allowed the vehicles to proceed when stopping was required.
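To make the idea of a higher-level rule that stays safe under ambiguous inputs concrete, here is a minimal sketch in Python. It is purely illustrative and does not describe Waymo's software: the `BusObservation` fields, the `school_bus_rule` function, and both threshold values are hypothetical names and numbers chosen for the example. The rule treats stopping as the default whenever a school bus is plausibly present, and allows the vehicle to proceed only when the stop-arm and pedestrian cues are confidently absent.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    STOP = "stop"


@dataclass(frozen=True)
class BusObservation:
    # Hypothetical perception outputs, each a confidence in [0, 1].
    school_bus_conf: float
    stop_arm_extended_conf: float
    pedestrian_nearby_conf: float


def school_bus_rule(
    obs: BusObservation,
    bus_threshold: float = 0.3,    # assumed value: low, so weak bus evidence still counts
    clear_threshold: float = 0.1,  # assumed value: cues must be near zero to count as absent
) -> Action:
    """Fail-safe rule: near a plausible school bus, stop unless clearly safe."""
    if obs.school_bus_conf < bus_threshold:
        # No plausible school bus in view; this rule does not apply.
        return Action.PROCEED
    stop_arm_clear = obs.stop_arm_extended_conf < clear_threshold
    pedestrians_clear = obs.pedestrian_nearby_conf < clear_threshold
    if stop_arm_clear and pedestrians_clear:
        return Action.PROCEED
    # Ambiguous or positive evidence resolves to stopping.
    return Action.STOP
```

In this sketch an uncertain reading, such as a stop-arm confidence of 0.5, resolves to `Action.STOP`. The design choice is that the burden of proof sits on proceeding, so a threshold or calibration gap is more likely to cause an unnecessary stop than a missed one. A real system would combine such a rule with many others, including general pedestrian handling, which this example leaves out.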

The inquiry also raises broader policy and social issues. Autonomous vehicle deployments rely on public trust and clear regulatory expectations, especially where children and other vulnerable road users are concerned. If investigators determine that software or operational policies materially increased risk, NHTSA could require remedies that range from software patches to an official recall that compels more extensive changes across vehicle fleets.

As the federal review proceeds, school districts and municipal governments are grappling with how to maintain safe pickup and drop-off routines. For companies, the episode is a reminder that real-world edge cases in urban environments can outpace assumptions made during testing. How quickly Waymo and regulators identify and implement fixes will have implications not only for immediate safety around schools but also for the future pace of autonomous vehicle deployment nationwide.