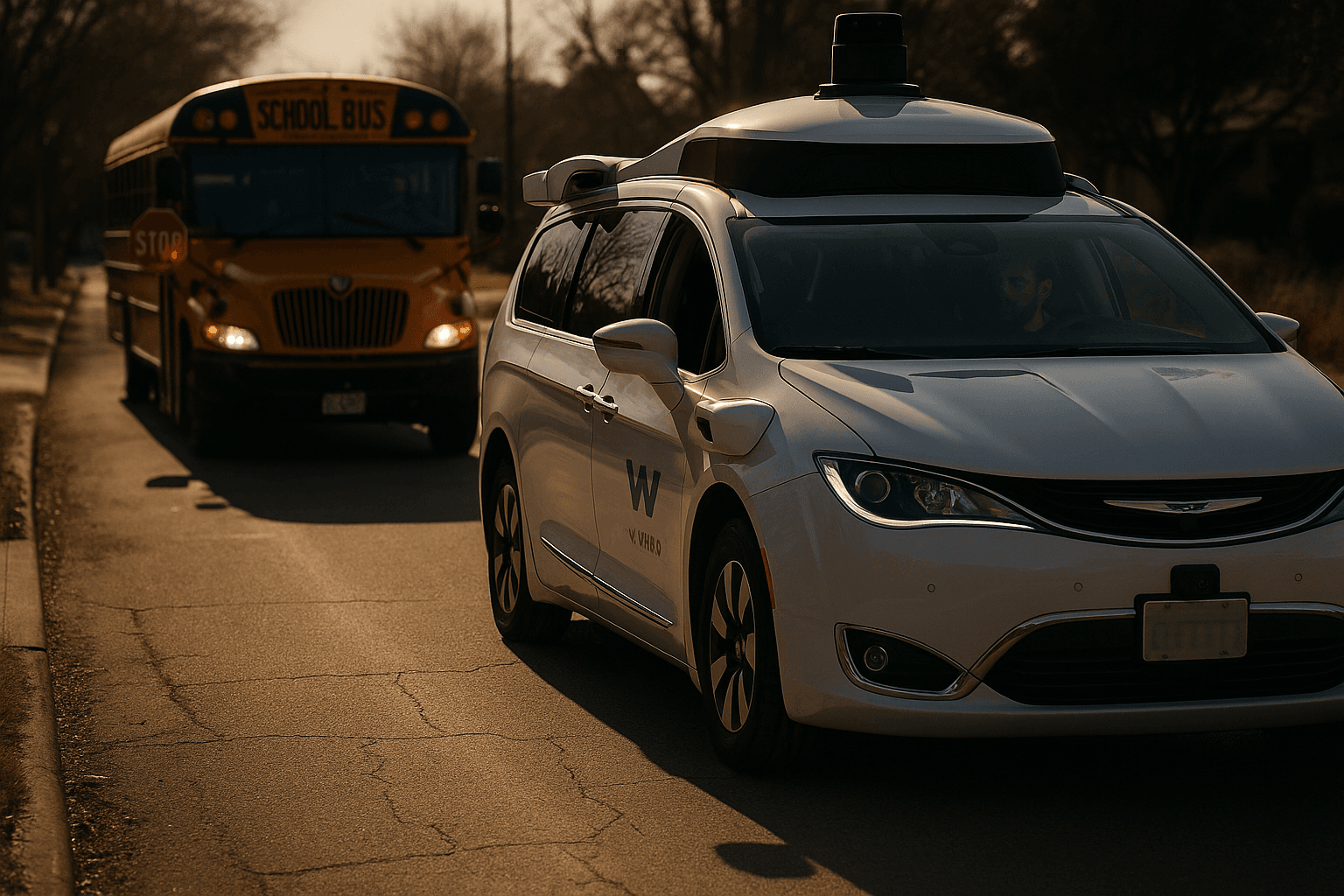

Waymo Recalls 3,067 Vehicles After School Bus Software Failure

The National Highway Traffic Safety Administration has announced that Waymo recalled 3,067 vehicles after a software defect in its fifth-generation automated driving system caused some cars to drive past stopped school buses, increasing the risk of a crash. Waymo deployed a software update and told Reuters that all affected vehicles were repaired by November 17, but the regulator's action underscores growing scrutiny of autonomous vehicle safety.

The National Highway Traffic Safety Administration announced today that Alphabet unit Waymo has recalled 3,067 vehicles in the United States because of a software defect in its fifth-generation automated driving system that could cause the vehicles to drive past stopped school buses. The agency said the flaw raised the risk of collisions with children boarding or leaving school buses, and the hazard prompted the formal recall notice.

According to Reuters, Waymo pushed a software update and told the news service that all affected vehicles were repaired by November 17. The recall, made public by the regulator, formalizes what Waymo described as a completed remediation while drawing attention to the kinds of edge-case failures that can occur in machine-learning-based driving systems. The NHTSA notice describes the defect as a software issue tied to the automated system's behavior around stopped school buses, a scenario that traffic law and public expectations treat as one of the most safety-critical moments for road users.

The recall is notable because it involves driving decisions made by autonomous software rather than a fault in mechanical parts. Automated driving systems must perceive a dynamic scene, classify the objects in it, and choose legally compliant, safe actions in real time. Failing to stop while a school bus is loading or unloading violates one of the clearest safety priorities on the road and raises the potential for serious harm. The NHTSA action signals that the federal regulator is prepared to move from investigation to recall when algorithmic behavior creates unacceptable risk.

Waymo, one of the most prominent developers of commercial autonomous vehicles, has long argued that its systems improve safety by reducing human error. The company said it implemented the update across the affected fleet before the regulator announced the recall. Nonetheless, the incident highlights the challenges of scaling automated driving across diverse road environments and the difficulty of proving safety in every conceivable situation.

Regulatory and industry observers say the episode will likely sharpen calls for clearer standards and more rigorous pre-deployment validation. Lawmakers and safety advocates have pressed for more transparency about how autonomous systems are tested and how companies track and remediate failures. The recall may prompt NHTSA to increase oversight and could influence how cities and states evaluate permits for, and deployment of, autonomous services.

For consumers and communities, the episode is a reminder that software updates can resolve documented faults but do not eliminate the underlying complexity of autonomous driving. Public trust in these technologies depends both on rapid fixes when problems arise and on stronger assurances that systems have been tested against high-risk scenarios. As Waymo and other companies push ahead with broader rollouts, regulators will be watching whether software-driven vehicles can meet the safety expectations the public holds when children are on the road.
