Cloudflare Says Internal Parsing Change Caused Brief Outage, Services Restored

Cloudflare traced a short December 5 outage to an internal change to its body parsing logic, saying the issue was not a cyber attack and that services were restored within 25 minutes. The incident affected roughly 28 percent of the company's HTTP traffic, underscoring the risks of rapid global configuration changes at critical internet infrastructure providers.

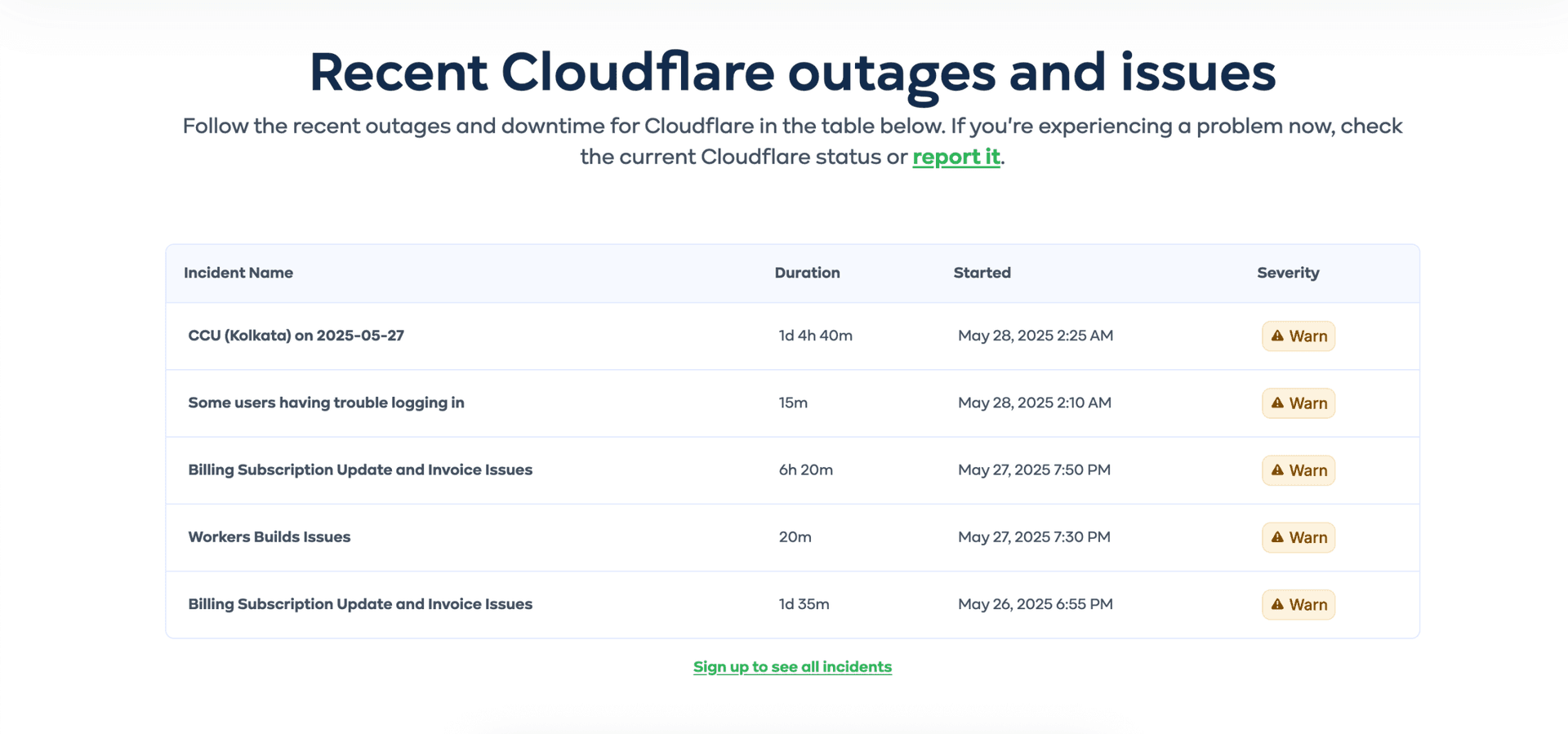

A portion of Cloudflare's global network experienced failures on December 5, leaving some customer sites returning server errors for roughly 25 minutes. The company reported that the incident began at 08:47 UTC and was fully resolved at 09:12 UTC. Although the disruption was brief, it affected about 28 percent of the HTTP traffic Cloudflare serves and temporarily interrupted access to high-profile customers including Coinbase and Anthropic's Claude AI, according to news reports.

Cloudflare said in a blog post that the outage was not caused by a cyber attack. Instead, engineers traced the problem to a recent change to body parsing logic that had been implemented to mitigate a disclosed vulnerability in React Server Components. The change increased an internal buffer size and disabled an internal testing tool. That disablement was deployed through Cloudflare's global configuration system, and its rapid propagation triggered a bug in older proxy software known as FL1. The interaction produced HTTP 500 errors across affected customer sites.

Engineers identified the offending change and reverted it, restoring normal traffic and service delivery. Cloudflare apologized to customers and pledged to publish additional technical details on the incident and on projects intended to improve system resilience. The company said it would immediately pursue enhanced rollouts and versioning for configuration changes, strengthen "break glass" capabilities to allow faster, targeted interventions, and add fail-open error handling to limit the blast radius of similar issues.

The sequence highlights a central tension for internet infrastructure providers. Rapid fixes for newly discovered vulnerabilities are important for security, but global configuration systems that propagate changes quickly can amplify unintended consequences when older or unexpected software components sit in the request path. In this case, the failure stemmed from an interaction with legacy proxy software rather than a flaw in customer code, yet the result was widely visible as sites returned server errors.

Markets reacted to the disruption. Cloudflare shares fell in premarket trading after news of the outage, according to Reuters and other outlets. Cloudflare's move to lock down certain classes of configuration changes while it implements fixes is aimed at reassuring customers and investors that future incidents will be less likely to cause broad interruptions.

The event will likely prompt customers and industry observers to press for clearer change management and testing practices at services that act as gateways for web traffic. For enterprises that rely on third-party edge providers for performance and security, the outage is a reminder that operational dependencies and heterogeneous network software stacks can create unexpected fragility.

Cloudflare's promise to publish more detailed postmortem material is intended to help engineers and customers understand both the technical root cause and the governance changes the company will adopt to reduce the chance of a repeat. Until those details are released, the episode stands as a concise lesson in the tradeoffs between speed and stability at the infrastructure layer of the modern internet.